Testing Whether AI-Enhanced Collaboration Transforms Sprint Productivity

PROBLEM

Teams Waste 20% of Every Sprint on Scattered Tools and Reactive Planning

I watched a Scrum Master spend 20 minutes hunting for sprint blockers across Jira, Slack, and three different spreadsheets while her team waited in standup. Teams were jumping between five different tools just to understand basic sprint progress.

What frustrated me more was watching them fly blind: estimating capacity by gut feel, discovering critical blockers during standups instead of when they happened, and constantly guessing whether sprints would finish on time. It felt absurd that AI could predict code bugs while teams were still playing guessing games with sprint delivery.

RESEARCH

Four Teams Revealed Why Sprint Management Needs AI

I shadowed 4 teams across 2 companies during their actual sprint ceremonies and timed their context switches. The average team lost 65 minutes per day just navigating between tools and manually managing sprint overhead. Scrum Masters were playing detective, manually piecing together sprint health from scattered data.

But the bigger insight came from watching teams miss patterns an AI could catch: capacity overruns that were predictable from past sprints, blockers that recurred with the same team dynamics every time, and sprint risks that built up gradually but were only noticed in a crisis. The moment that stuck with me was when an engineer said, "By the time I update everything, I've forgotten what I was actually building."

DAILY TIME BREAKDOWN

Lost time: 65 minutes lost daily to manual overhead

Sprint waste: 30% of every sprint spent on overhead

Context switching: 55 tool switches per day, each one breaking focus

COMPETITIVE ANALYSIS

Competitors Offer Point Solutions, Not Unified Platforms

I analyzed the major sprint planning tools to understand why teams were still struggling despite having options like Jira, ClickUp, and Monday. What I found was that each competitor solved part of the puzzle: Jira handled sprint boards well, ClickUp had solid project management, and Monday offered good collaboration features, but none unified the entire workflow with intelligent assistance.

The bigger realization was that competitors were stuck in the old paradigm of building better point solutions rather than rethinking the underlying problem. While they competed on features like better kanban boards or prettier interfaces, teams were still jumping between tools. This suggested that a unified platform with built-in intelligence wasn't just different; it targeted a problem none of these tools was addressing.

Feature coverage was assessed across seven features: Integrated Video Calls, AI-Powered Planning, Real-time Health Monitoring, Sprint Planning, Burndown Charts, Team Collaboration, and Advanced Reporting.

| Tool | Feature Coverage |
|---|---|
| SprintView | 6/7 |
| Jira | 4/7 |
| ClickUp | 4/7 |

STRATEGY

One Platform with Intelligence Everywhere

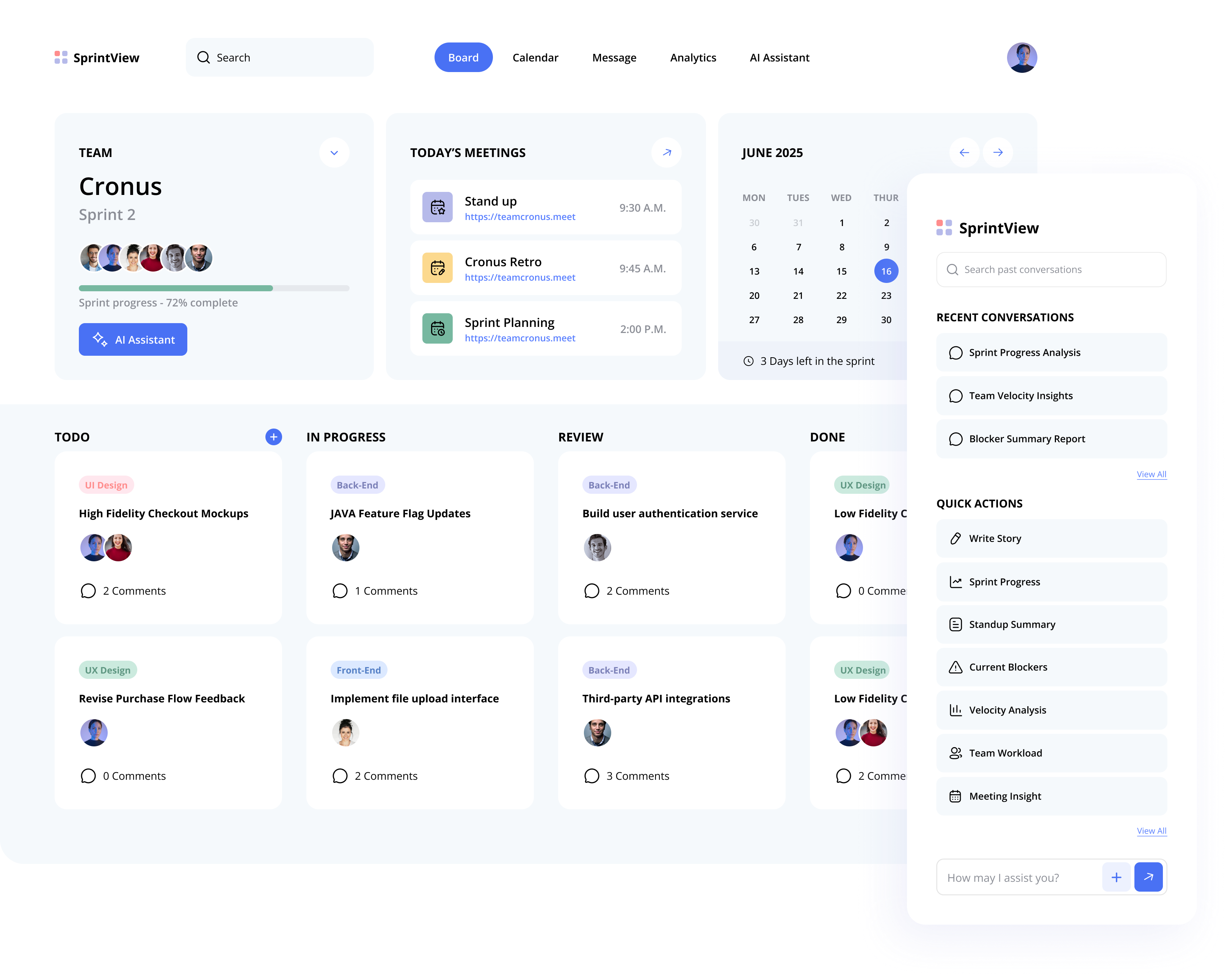

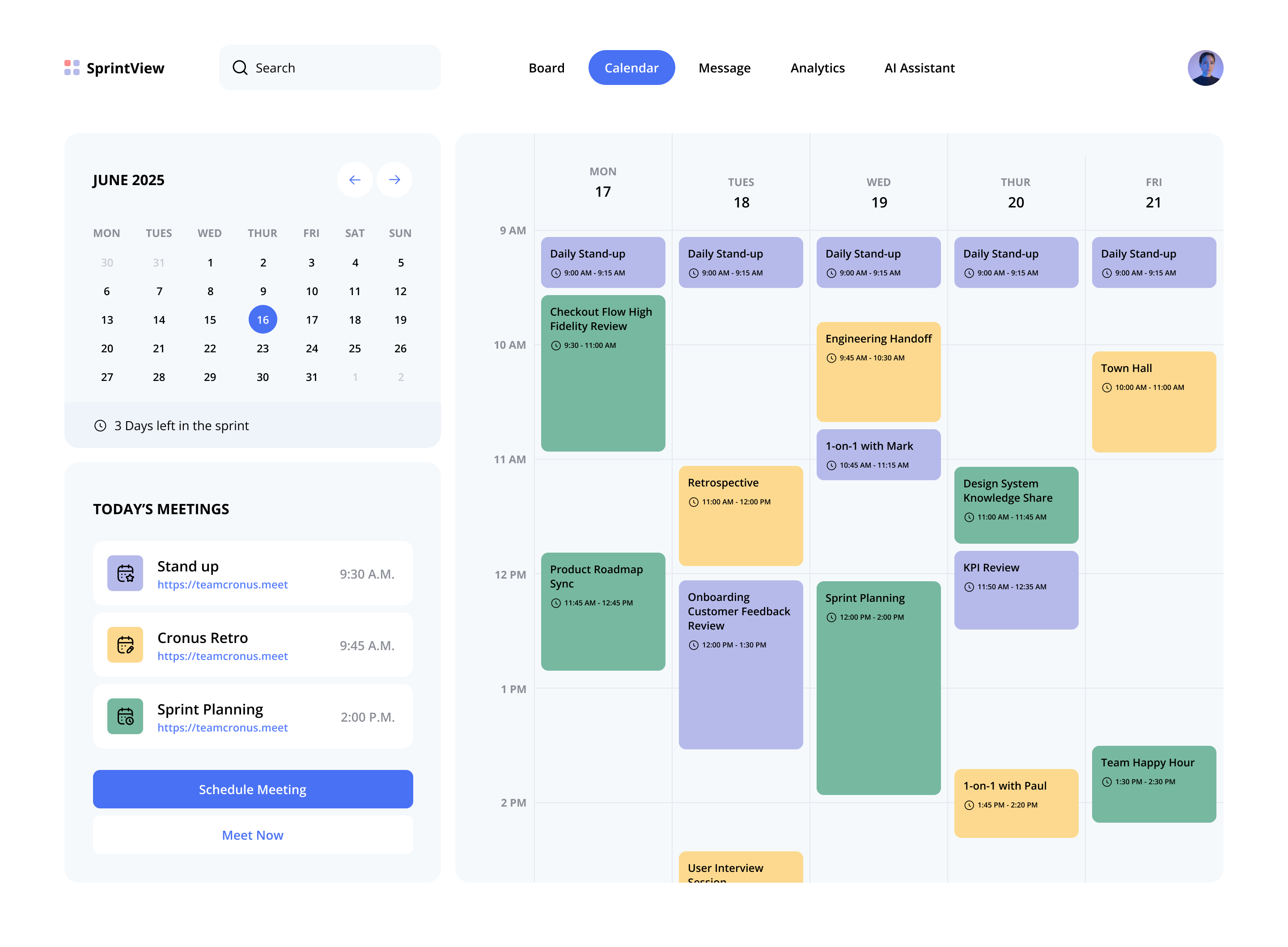

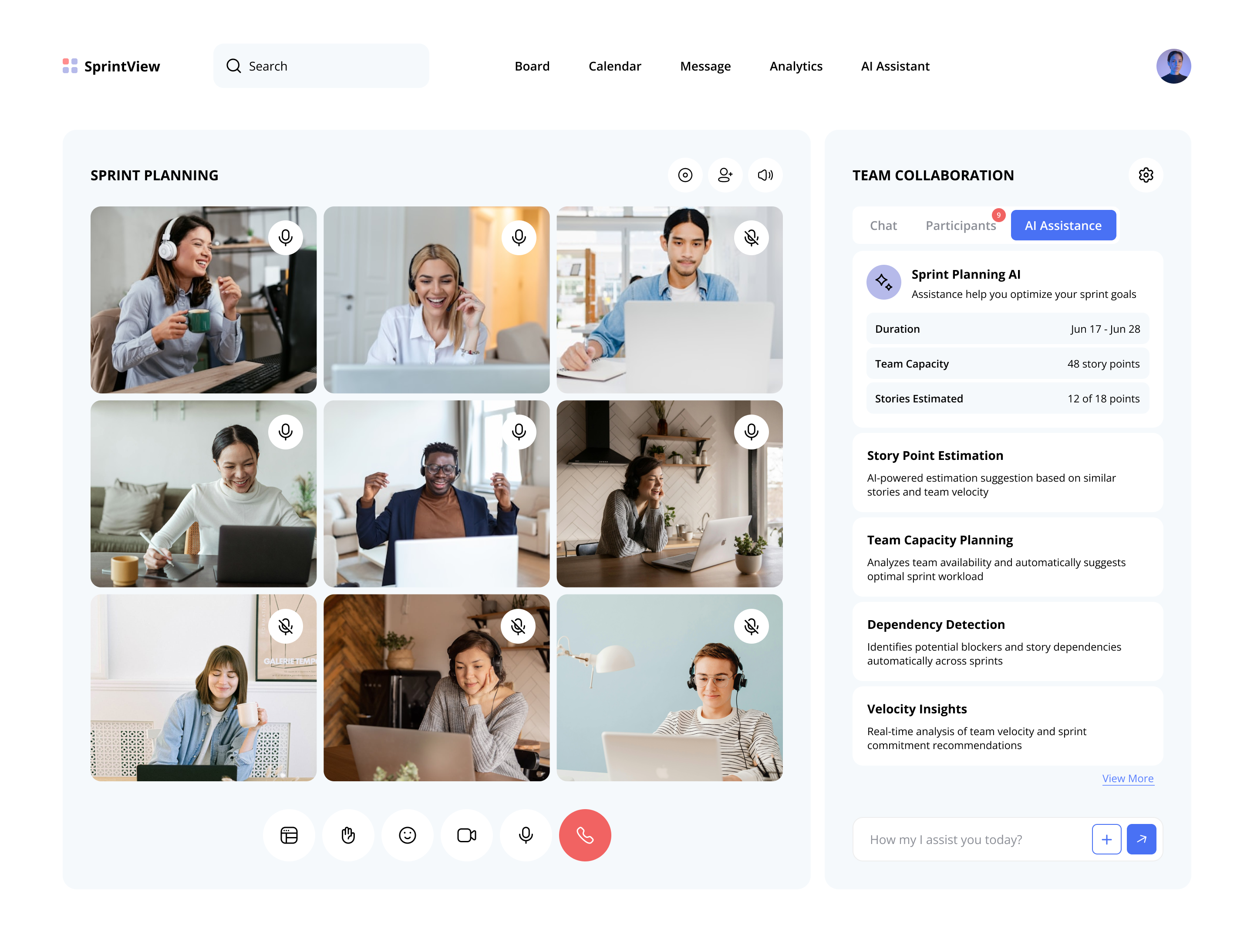

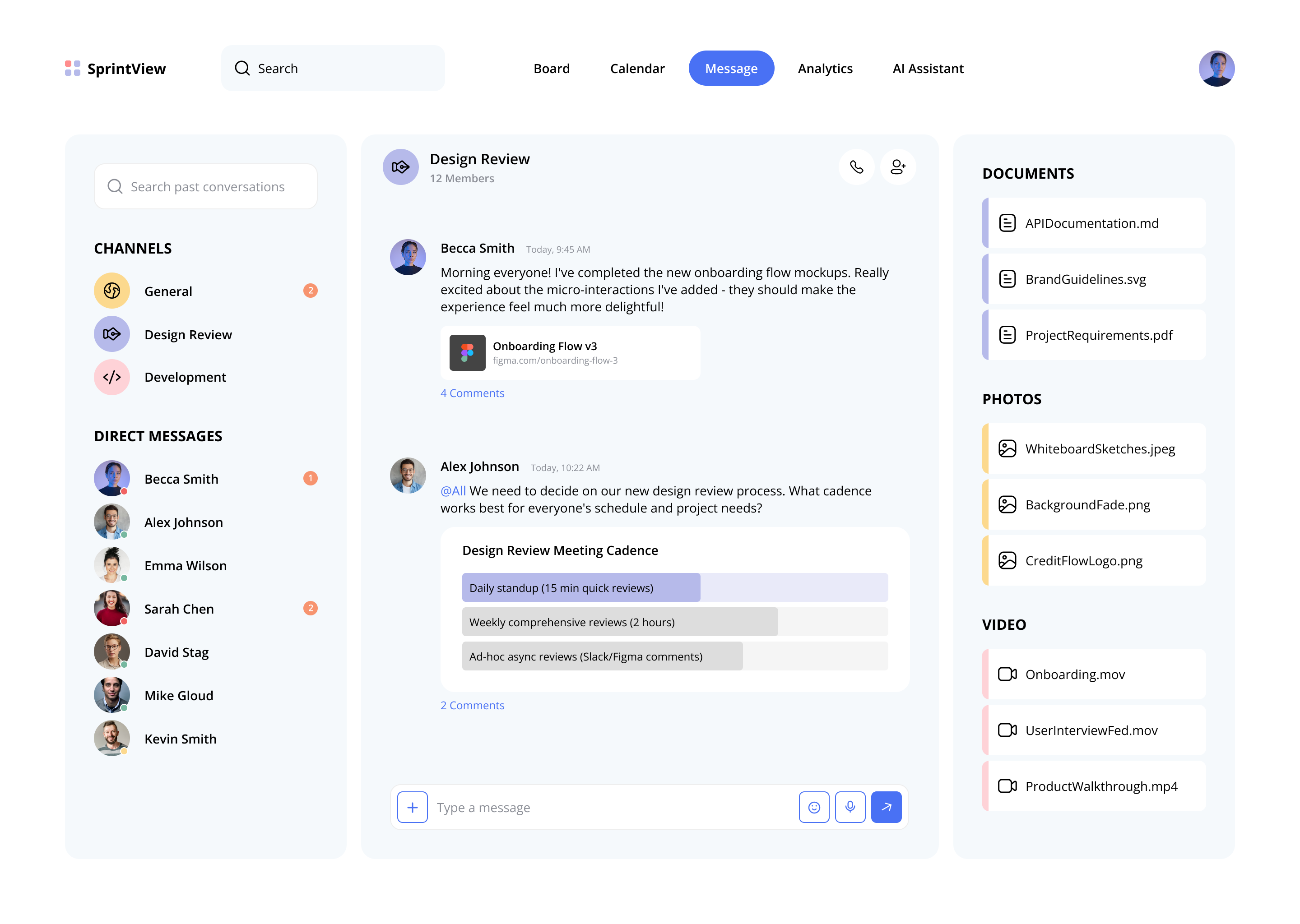

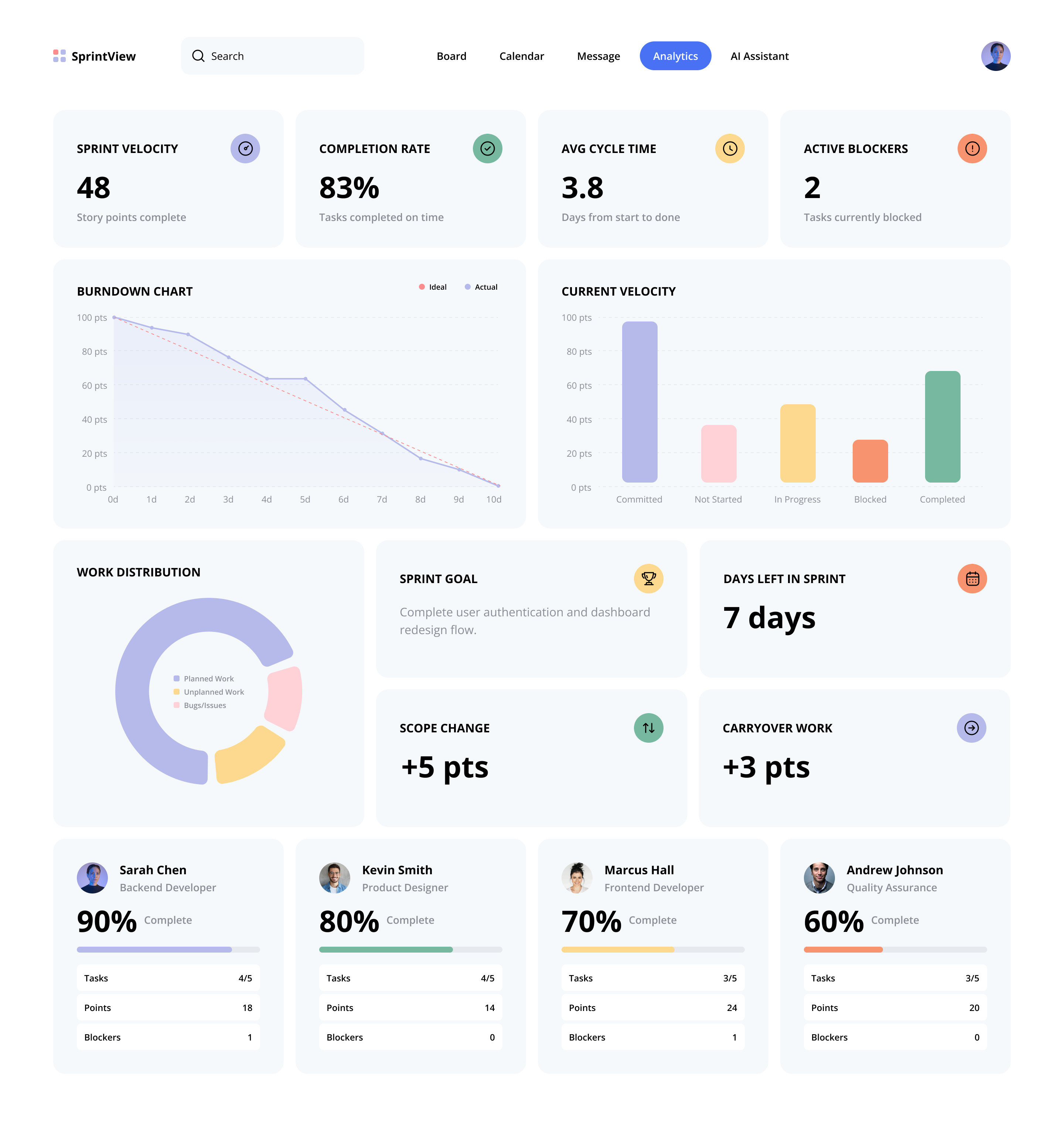

Instead of building another point solution, I designed a unified collaboration platform that brings sprint boards, video meetings, team chat, scheduling, and analytics into one intelligent workspace. Teams needed to stop jumping between tools to piece together their sprint reality.

The key insight: AI shouldn't be a separate assistant; it should be ambient intelligence that enhances every feature. From suggesting optimal meeting times based on team patterns to predicting sprint completion from historical velocity, intelligence should flow naturally through the entire platform rather than feeling bolted on.
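To make "predicting sprint completion from historical velocity" concrete, here is a minimal sketch of one way such a check could work, assuming velocity is recorded as story points completed per past sprint. The function name `forecast_sprint` and the risk bands (average velocity, plus a conservative estimate of average minus one standard deviation) are hypothetical choices for illustration, not how SprintView actually computes its forecast.

```python
from statistics import mean, pstdev


def forecast_sprint(past_velocities: list[float], committed_points: float) -> dict:
    """Hypothetical helper: compare a sprint commitment against past velocity.

    Assumes each entry in past_velocities is the story points a team
    completed in one earlier sprint.
    """
    if not past_velocities:
        raise ValueError("need at least one past sprint")
    average = mean(past_velocities)
    spread = pstdev(past_velocities)  # population standard deviation
    conservative = average - spread   # assumed pessimistic-but-plausible velocity

    # Assumed risk bands: within the conservative estimate is low risk,
    # up to the average is medium, beyond the average is high.
    if committed_points <= conservative:
        risk = "low"
    elif committed_points <= average:
        risk = "medium"
    else:
        risk = "high"
    return {"average": average, "conservative": conservative, "risk": risk}
```

For example, a team with past velocities of 30, 34, 26, and 30 points that commits to 32 points would be flagged as high risk, since 32 exceeds its average of 30. A real forecast would likely need a longer history and would have to account for capacity changes such as vacations.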

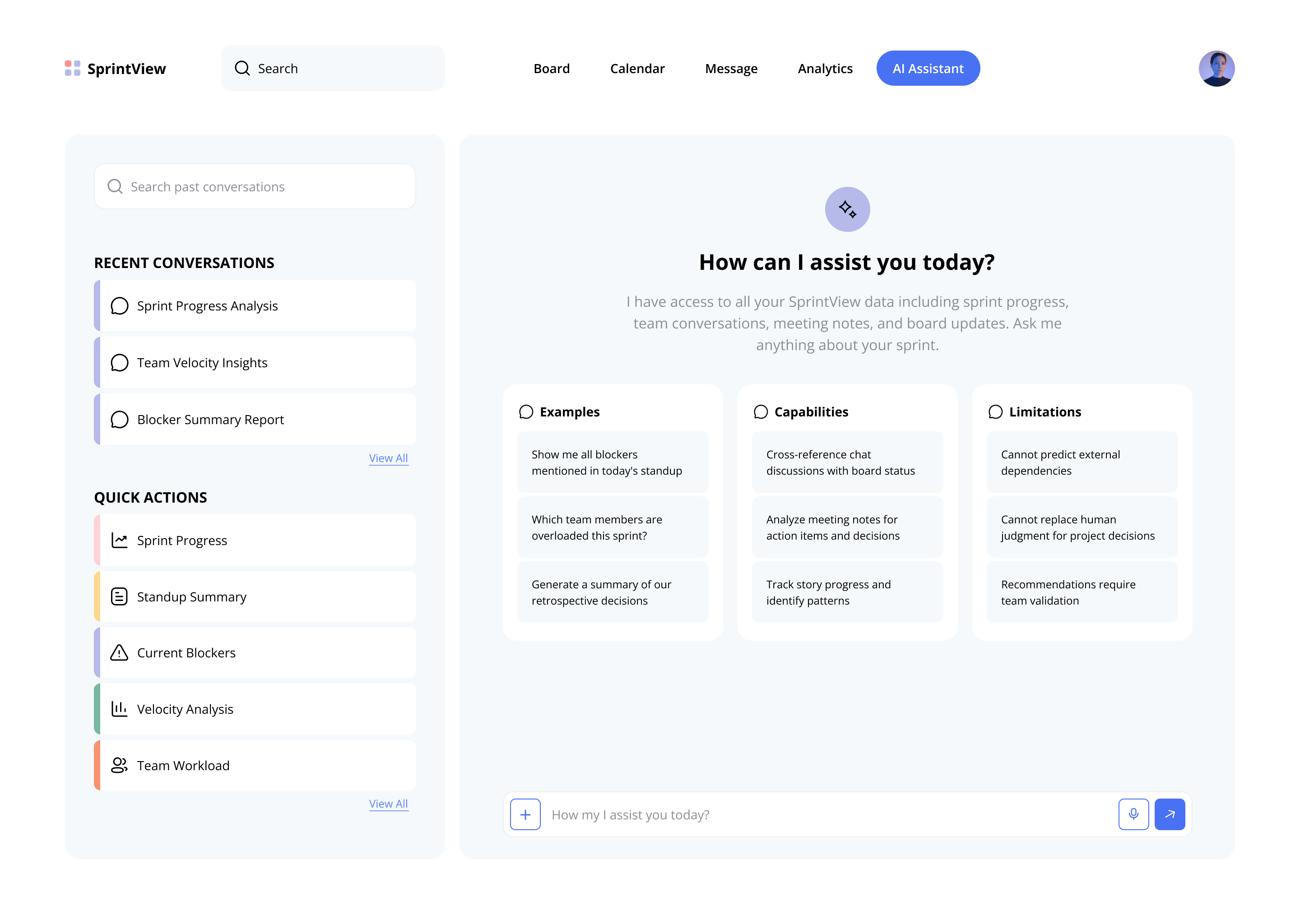

How can I assist you today?

I have access to all your SprintView data, including sprint progress, team conversations, meeting notes, and board updates. Ask me anything about your sprint.

Examples

- Show me all blockers mentioned in today's standup
- Which team members are overloaded this sprint?
- Generate a summary of our retrospective decisions

Capabilities

- Cross-reference chat discussions with board status
- Analyze meeting notes for action items and decisions
- Track story progress and identify patterns

Limitations

- Cannot predict external dependencies outside your team's control
- Cannot replace human judgment for complex project decisions
- Recommendations require team validation before implementation
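In its simplest form, the "cross-reference chat discussions with board status" capability is a join between chat text and board state. The sketch below assumes Jira-style story keys (for example `SV-12`), a small hand-picked list of blocker phrases, and a plain dictionary of board statuses; `ChatMessage` and `unflagged_blockers` are hypothetical names, and keyword matching stands in for whatever language understanding the real assistant would use.

```python
import re
from dataclasses import dataclass

# Assumption: stories use Jira-style keys such as "SV-12".
STORY_KEY = re.compile(r"\b[A-Z][A-Z0-9]*-\d+\b")
# Assumption: simple keyword matching stands in for real language understanding.
BLOCKER_PHRASES = ("blocked", "blocker", "waiting on", "stuck")


@dataclass
class ChatMessage:
    author: str
    text: str


def unflagged_blockers(messages: list[ChatMessage],
                       board_status: dict[str, str]) -> dict[str, list[str]]:
    """Map each story key that chat describes as blocked, but whose board
    status is not "Blocked", to the authors who mentioned it."""
    flagged: dict[str, list[str]] = {}
    for msg in messages:
        lowered = msg.text.lower()
        if not any(phrase in lowered for phrase in BLOCKER_PHRASES):
            continue  # message does not mention a blocker
        # dict.fromkeys drops repeated keys within one message, keeping order.
        for key in dict.fromkeys(STORY_KEY.findall(msg.text)):
            if board_status.get(key) != "Blocked":
                flagged.setdefault(key, []).append(msg.author)
    return flagged
```

The function only reports mismatches and never updates the board itself, which fits the stated limitation that recommendations require team validation before anything changes.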

PROTOTYPE

Building and Testing the Unified Platform Concept

I'm building a working prototype to test whether eliminating tool switching actually solves the problems I observed. Rather than just theorizing about unified workflows, I need to put something real in front of teams and watch how they use it. The prototype brings together the core sprint activities—boards, meetings, chat, scheduling—into one workspace where I can test if intelligence truly emerges from connected data.

Each week, I'm testing new features with real teams and iterating based on their feedback. The big question isn't whether I can build these features, but whether teams actually change their behavior when the friction disappears. These testing sessions are revealing which assumptions hold true and which need to be rethought as I work toward a solution that genuinely transforms how teams collaborate during sprints.

RESULTS COMING SOON

Validating Impact Through Real Team Usage

Once the prototype testing is complete, I'll have concrete data on whether this unified approach actually delivers the productivity gains teams need. I'm tracking key metrics like time saved per sprint, reduction in context switching, and early blocker detection to validate the original hypothesis that tool fragmentation is costing teams significant overhead.
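One way to test the original hypothesis is to compare prototype measurements against the baseline from the shadowing research: 65 minutes of overhead and 55 tool switches per day. The sketch below assumes those two numbers as the baseline, a hypothetical `DailyMetrics` record for the per-day averages observed during testing, and a 10-working-day sprint as the default; none of these helpers exist in SprintView as written, and any observed values fed into it are placeholders until testing is complete.

```python
from dataclasses import dataclass


@dataclass
class DailyMetrics:
    overhead_minutes: float  # minutes per day spent navigating tools and updating status
    tool_switches: float     # average number of tool switches per day


# Baseline taken from the shadowing research (65 minutes, 55 switches per day).
BASELINE = DailyMetrics(overhead_minutes=65, tool_switches=55)


def percent_reduction(before: float, after: float) -> float:
    """Percentage drop from `before` to `after`; negative if the value went up."""
    if before == 0:
        raise ValueError("baseline value must be non-zero")
    return round((before - after) / before * 100, 1)


def compare_to_baseline(observed: DailyMetrics, sprint_days: int = 10,
                        baseline: DailyMetrics = BASELINE) -> dict:
    """Summarize the tracked metrics relative to the research baseline.

    sprint_days defaults to 10, an assumed two-week sprint of working days.
    """
    saved_per_day = baseline.overhead_minutes - observed.overhead_minutes
    return {
        "minutes_saved_per_sprint": saved_per_day * sprint_days,
        "overhead_reduction_pct": percent_reduction(
            baseline.overhead_minutes, observed.overhead_minutes),
        "switching_reduction_pct": percent_reduction(
            baseline.tool_switches, observed.tool_switches),
    }
```

Keeping the baseline as an explicit parameter makes it easy to re-run the comparison per team, since each shadowed team started from a different level of overhead.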

The real test will be seeing teams adopt new workflows naturally and reporting back that their sprints feel more predictable and less chaotic. These findings will determine the next phase of development and provide the evidence needed to scale this solution to more teams facing the same collaboration challenges.